Install DB Proxy with Example Schema

To help contextualize the encryption operations, an example schema and dataset are provided for users who wish to follow the guide exactly. Alternatively, you can use your own application database, but you will need to configure it as described in the Deployment section.

This guide assumes that you have chosen to install the provided upstream PostgreSQL database, referred to as "existing infrastructure," which comes with an example dataset. This database is intended to help you explore the functionality of the Antimatter Database Proxy.

Installation

This guide offers two methods for installing the example database. The method you choose should align with the installation approach you selected during Deployment. The available methods are Kubernetes manifest and Docker Compose.

Kubernetes

Below is a minimal Kubernetes manifest for deploying a pod running the example database. Additionally, a modified configuration file for your Kubernetes deployment is provided in the Deployment section. There should be no need to modify the provided Kubernetes manifest; you only need to update the configuration file as required.

This guide assumes that the example database is installed in the same namespace as the Antimatter database proxy.

Configuration file

The following settings will need to be used for the proxy and upstream databases, however, the domain ID, API key, write context and read context will still need to be provided.

cat > /tmp/antimatter/intercept.yaml <<EOF

encryption-parameters:

domain-id: <Antimatter domain ID>

api-key: <Antimatter domain ID's API key>

write-context: <valid in domain write-context name>

read-context: <valid in domain read-context name>

api-url: "https://api.antimatter.io"

proxy:

credentials:

address: "db-proxy-service.default.svc.cluster.local"

port: "5432"

username: "root"

password: "password"

upstream:

credentials:

address: "infra-service.default.svc.cluster.local"

port: "5432"

username: "postgres"

password: "postgres"

databases:

- "employees"

EOF

Kubernetes manifest

The following Kubernetes manifest is aligned with the configuration file provided here. You should only need to apply this file.

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: infra-data-pvc

spec:

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 1Gi

---

apiVersion: apps/v1

kind: Deployment

metadata:

name: infra

spec:

replicas: 1

selector:

matchLabels:

app: infra

template:

metadata:

labels:

app: infra

spec:

containers:

- name: infra

image: infra:test

ports:

- containerPort: 5432

env:

- name: POSTGRES_USER

value: "postgres"

- name: POSTGRES_PASSWORD

value: "postgres"

- name: POSTGRES_DB

value: "postgres"

volumeMounts:

- name: infra-data

mountPath: /var/lib/postgresql/data

readinessProbe:

exec:

command: ["pg_isready", "-U", "postgres", "-h", "127.0.0.1", "-p", "5432"]

initialDelaySeconds: 5

periodSeconds: 3

timeoutSeconds: 1

successThreshold: 1

failureThreshold: 10

volumes:

- name: infra-data

persistentVolumeClaim:

claimName: infra-data-pvc

---

apiVersion: v1

kind: Service

metadata:

name: infra-service

spec:

type: NodePort

ports:

- port: 5432

targetPort: 5432

nodePort: 30434

selector:

app: infra

Docker

Below is a minimal Docker Compose file to deploy a container running the example database. A modified configuration file for your Docker Compose setup is also provided in the Deployment section. There should be no need to modify the provided Docker Compose file; you only need to update the configuration file as needed.

Configuration file

cat > /tmp/antimatter/intercept.yaml <<EOF

encryption-parameters:

domain-id: <Antimatter domain ID>

api-key: <Antimatter domain ID's API key>

write-context: <valid in domain write-context name>

read-context: <valid in domain read-context name>

api-url: "https://api.antimatter.io"

proxy:

credentials:

address: "engine"

port: "5432"

username: "root"

password: "password"

upstream:

credentials:

address: "infra"

port: "5432"

username: "postgres"

password: "postgres"

databases:

- "employees"

EOF

Docker compose contents

The following Docker Compose file is aligned with the configuration file provided here. You should only need to run docker-compose -f <path-to-file> up infra.

version: '3.8'

services:

infra:

image: infra:test

container_name: infra

profiles: ["full"]

ports:

- "8434:5432"

environment:

POSTGRES_USER: postgres

POSTGRES_PASSWORD: postgres

POSTGRES_DB: postgres

volumes:

- infra_data:/var/lib/postgresql/data

healthcheck:

test: [ "CMD-SHELL", "pg_isready -U postgres -h 127.0.0.1 -p 5432" ]

interval: 3s

timeout: 1s

retries: 10

volumes:

infra_data:

Exploring the example database

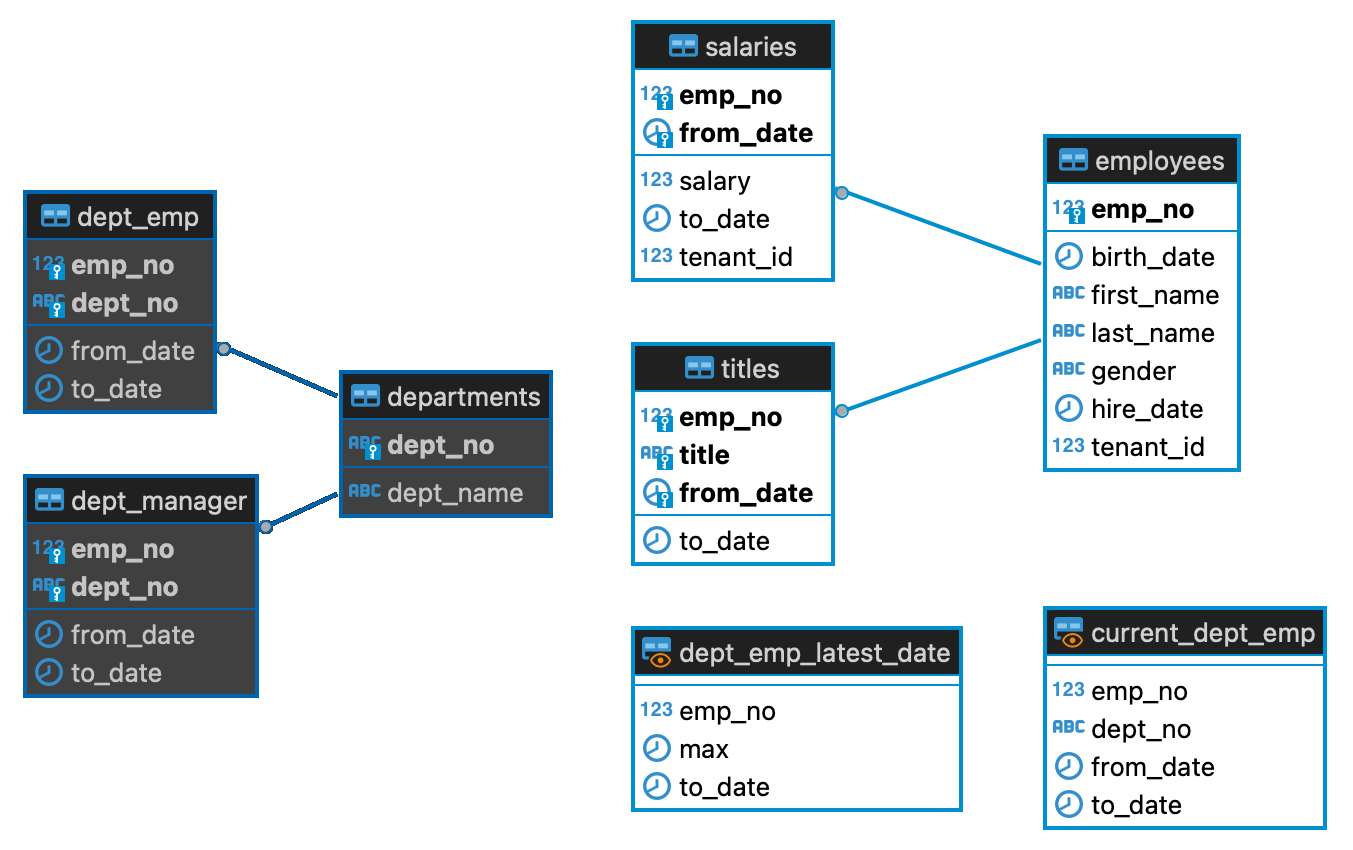

The example external infrastructure includes a PostgreSQL database called employees, which holds information about the staff employed by a fictitious company. This database contains both sensitive and non-sensitive information, such as:

- The names and birthdays of employees.

- A full history of salaries associated with all employees of the company.

- The departments within the company along with their current managers.

- A full history of titles for all employees of the company.

The schema of the employees database is illustrated below:

If you would like to explore the schema, you can connect to your target database directly and do so. If you do, you will notice that nothing Antimatter-specific has been done to it yet.

Connecting to the database proxy

Here are some helper commands that utilize psql to connect to the example database.

Kubernetes

First, obtain the pod name:

POD_NAME=$(kubectl get pods -l app=db-proxy-app -o custom-columns=NAME:.metadata.name --no-headers | tr -d '\n')

Next, port-forward the database proxy's intercept listening port to enable a connection:

kubectl port-forward $POD_NAME 54322:54322

Finally, connect to the database:

PGPASSWORD="postgres" psql --host localhost --username "postgres" --port 54322 employees

Docker compose

To establish a connection, use the following command:

PGPASSWORD="postgres" psql --host localhost --username "postgres" --port 32432 employees

You can also connect to the proxy through the toolbox pod by using the release name and namespace of the database proxy:

kubectl exec -it -n ${CORE_NAMESPACE} ${CORE_RELEASE}-toolbox-0 -- psql postgresql://${DBUSER}:${DBPASS}@${DBPROXY_RELEASE}-database-encryption/employees

Testing without encryption

Next we test that the mirroring is working as expected by executing a number of simple queries. The objective of these examples is to validate that the proxy does not reduce the functionality seen with a direct connection.

- Start with a simple lookup that includes a few conditionals:

SELECT salaries.emp_no, salaries.from_date, salaries.salary

FROM salaries

WHERE tenant_id = 1 AND emp_no = 10007 AND salary > 70000;

The objective of this query is to confirm that the connection to the upstream database is operating as intended. If successful, the query response should be:

emp_no | from_date | salary

--------+------------+--------

10007 | 1996-02-09 | 70220

10007 | 1997-02-08 | 73362

10007 | 1998-02-08 | 75582

10007 | 1999-02-08 | 79513

10007 | 2000-02-08 | 80083

10007 | 2001-02-07 | 84456

10007 | 2002-02-07 | 88070

(7 rows)

- Next, increase the query complexity, adding additional components to again satisfy that the connection is working as intended.

SELECT employees.emp_no, employees.first_name, employees.last_name, AVG(salaries.salary) as average

FROM employees

INNER JOIN salaries ON employees.emp_no = salaries.emp_no

WHERE employees.tenant_id = 1

GROUP BY employees.emp_no, employees.first_name, employees.last_name

ORDER BY average

LIMIT 10;

The expected result is:

emp_no | first_name | last_name | average

--------+------------+------------+--------------------

10152 | Jaques | Munro | 42161.000000000000

10020 | Mayuko | Warwick | 43278.200000000000

10045 | Moss | Shanbhogue | 44555.666666666667

10139 | Ewing | Foong | 45351.000000000000

10116 | Dayanand | Czap | 46353.200000000000

10109 | Mariusz | Prampolini | 47531.400000000000

10118 | Zhonghui | Zyda | 47831.181818181818

10023 | Bojan | Montemayor | 49438.333333333333

10191 | Zdislav | Nastansky | 51241.705882352941

10134 | Diederik | Siprelle | 55202.066666666667

(10 rows)

- Finally run the following query:,

SELECT employees.emp_no, employees.first_name, employees.last_name, AVG(salaries.salary) AS average

FROM employees

INNER JOIN salaries ON employees.emp_no = salaries.emp_no

WHERE employees.tenant_id = 1

GROUP BY employees.emp_no, employees.first_name, employees.last_name

HAVING AVG(salaries.salary) > 70000

ORDER BY average

LIMIT 10;

You should observe the following result set:

emp_no | first_name | last_name | average

--------+------------+------------+--------------------

10007 | Tzvetan | Zielinski | 70826.714285714286

10074 | Mokhtar | Bernatsky | 71720.750000000000

10194 | Josyula | Hofmeyr | 73190.333333333333

10093 | Sailaja | Desikan | 75088.500000000000

10070 | Reuven | Garigliano | 75165.588235294118

10099 | Valter | Sullins | 83901.714285714286

(6 rows)

Introducing data encryption

With the proxy now established and confirmed to work with simple DML operations, the next step is to encrypt one of the tables and observe the effects in both the proxy and the upstream database. For this demonstration, the salaries table from the example database will be used. Start by selecting the salaries table and marking three of its columns for encryption.

- A valid Antimatter domain.

- A valid peer domain configured as a subordinate domain.

- The subordinate peer domain's nickname. For this example, we will assume the name is

customer1.

To perform encryption-related operations, the intercept must be in a state that allows it to accept encrypted commands. There are two ways to achieve this:

- Set the current transaction to be an encrypted transaction block.

- Set the session to be an encrypted session.

The advantage of an encrypted transaction is that the peer nickname used is only valid within that transaction. An encrypted transaction block is defined as a transaction where the very first query after BEGIN is a request to inform the proxy which nickname should be used for cryptographic operations within the block. The format for this is SET antimatter.opts='nickname=<EXTERNAL_ID>'; and is used as follows:

BEGIN;

SET antimatter.opts='nickname=tenant_a';

<operations in this block will now be encrypted/decrypted>

COMMIT;

An encrypted session is more flexable and can be entered at any point outside a transaction. Once entered, the session is considered to be encrypted until reverted back to plaintext. An encrypted transaction can be started within an encrypted session using the process described above. In this case, the external id and compartment information supplied will be in scope for the body of the transaction. Once complete, scope will revert back to the session level external ID and compartment.

Note: any transaction entered into from an encrypted session will be considered encrypted regardless of whether they begin with the SET command. If no SET was provided, or the supplied external id is invalid, the id used will default to the one supplied at the session level.

To introduce encryption to a table using an encrypted transaction, run the following on the proxy psql client:

BEGIN;

SET antimatter.opts='nickname=customer1';

SELECT ANTIMATTER('ALTER TABLE salaries ADD ENCRYPTION COLUMNS

salary DECRYPT ERROR RETURNS -1 REPLACE ORIGINAL 0,

to_date DECRYPT ERROR RETURNS "1900-01-01"');

COMMIT;

Now that the table has been encrypted, if the example application is being used, all queries described in the above testing section should be unaffected by this change, feel free to validate this.

Inserting into an encrypted table

Now that a table has been marked for encryption, it's possible to begin inserting both encrypted and non-encrypted data. To observe the impact of introducing encrypted data into the upstream database, perform an INSERT operation on the encrypted table within an encrypted transaction block. In this example, a new record will be added for an employee with emp_no 10099 and a salary that is one greater than the current highest salary.

BEGIN;

SET antimatter.opts='nickname=customer1';

INSERT INTO salaries (tenant_id, emp_no, from_date, to_date, salary) VALUES

(

1, 10099, '2022-08-19', '2025-01-1',

(

SELECT * FROM

(

SELECT max(salaries.salary) + 1 as greatest_salary

FROM salaries

) AS subq

)

);

COMMIT;

Next, run a similar INSERT operation, but this time without using an encrypted transaction. This will result in the data being inserted into the table without encryption. Additionally, the new maximum salary, which was previously inserted as an encrypted item, will not be included in the calculation since it won't be decrypted outside of an encrypted session:

INSERT INTO salaries (tenant_id, emp_no, from_date, to_date, salary) VALUES

(

1, 10099, '2022-08-20', '2025-01-1',

(

SELECT * FROM

(

SELECT max(salaries.salary) + 1 as greatest_salary

FROM salaries

) AS subq

)

);

Next, fetch the records that were just inserted, along with the first and last name of the employee with emp_no 10099:

BEGIN;

SET antimatter.opts='nickname=customer1';

SELECT first_name, last_name, from_date, to_date, salary

FROM salaries

INNER JOIN employees ON salaries.emp_no = employees.emp_no

WHERE salaries.emp_no = 10099

AND from_date >= '2022-08-17'

AND to_date = '2025-01-01';

COMMIT;

This will return the recently inserted record:

first_name | last_name | from_date | to_date | salary

------------+-----------+------------+------------+--------

Valter | Sullins | 2022-08-19 | 2025-01-01 | 113230

Valter | Sullins | 2022-08-20 | 2025-01-01 | 113230

(2 rows)

The above command was performed from within an encrypted transaction block. If this was performed outside a transaction or from within a 'normal' transaction, the server will not be able to view the salary value for the record with from_date '2022-08-19'. To validate this, run:

SELECT first_name, last_name, from_date, to_date, salary

FROM salaries

INNER JOIN employees ON salaries.emp_no = employees.emp_no

WHERE salaries.emp_no = 10099

AND from_date >= '2022-08-17'

AND to_date = '2025-01-01';

This should only return one record, the one inserted outside of the encryption block:

first_name | last_name | from_date | to_date | salary

------------+-----------+------------+------------+--------

Valter | Sullins | 2022-08-20 | 2025-01-01 | 113230

(1 rows)

Now, to validate that the encryption component is functioning correctly, switch to a different customer. This can be done using either the ANTIMATTER USING command or the SET command:

SET antimatter.opts='nickname=customer2';

SELECT first_name, last_name, from_date, to_date, salary

FROM salaries

INNER JOIN employees ON salaries.emp_no = employees.emp_no

WHERE salaries.emp_no = 10099

AND from_date >= '2022-08-17'

AND to_date = '2025-01-01';

This should only return one record:

first_name | last_name | from_date | to_date | salary

------------+-----------+------------+------------+--------

Valter | Sullins | 2022-08-20 | 2025-01-01 | 113230

(1 row)

This is because the proxy is unable to decrypt the dates associated with the recently inserted encrypted record and so only returns the non-encrypted record. We can see this effect better by fetching all salary records for our employee:

SELECT first_name, last_name, from_date, to_date, salary

FROM salaries

INNER JOIN employees ON salaries.emp_no = employees.emp_no

WHERE salaries.emp_no = 10099;

Looking at the results, we see that the second to last record in the list has the to_date and the salary fields set to their default values while the last record is in plaintext:

first_name | last_name | from_date | to_date | salary

------------+-----------+------------+------------+--------

Valter | Sullins | 1988-10-18 | 1989-10-18 | 68781

Valter | Sullins | 1989-10-18 | 1990-10-18 | 70711

Valter | Sullins | 1990-10-18 | 1991-10-18 | 75094

Valter | Sullins | 1991-10-18 | 1992-10-17 | 78490

Valter | Sullins | 1992-10-17 | 1993-10-17 | 81154

Valter | Sullins | 1993-10-17 | 1994-10-17 | 81480

Valter | Sullins | 1994-10-17 | 1995-10-17 | 85032

Valter | Sullins | 1995-10-17 | 1996-10-16 | 84698

Valter | Sullins | 1996-10-16 | 1997-10-16 | 86038

Valter | Sullins | 1997-10-16 | 1998-10-16 | 86212

Valter | Sullins | 1998-10-16 | 1999-10-16 | 89257

Valter | Sullins | 1999-10-16 | 2000-10-15 | 93297

Valter | Sullins | 2000-10-15 | 2001-10-15 | 95842

Valter | Sullins | 2001-10-15 | 9999-01-01 | 98538

Valter | Sullins | 2022-08-19 | | 0

Valter | Sullins | 2022-08-20 | 2025-01-01 | 113230

(16 rows)

Migrating existing data

By default, encrypted tables do not modify existing data and only affect future records. Support for migrating existing records to the encrypted format is done through the ANTIMATTER MIGRATE function call. As an example, let us migrate all salaries and end dates for user Itzchak Lichtner. To begin, fetch the records so that we have something to compare against:

BEGIN;

SET antimatter.opts='nickname=customer1';

SELECT salaries.tenant_id, first_name, last_name, from_date, to_date, salary

FROM salaries

INNER JOIN employees ON employees.emp_no = salaries.emp_no

WHERE first_name = 'Itzchak' AND last_name = 'Lichtner';

COMMIT;

This should return the following when executed in the psql client:

tenant_id | first_name | last_name | from_date | to_date | salary

-----------+------------+-----------+------------+------------+--------

5 | Itzchak | Lichtner | 1992-12-12 | 1993-12-12 | 90741

5 | Itzchak | Lichtner | 1993-12-12 | 1994-12-12 | 94967

5 | Itzchak | Lichtner | 1994-12-12 | 1995-12-12 | 96726

5 | Itzchak | Lichtner | 1995-12-12 | 1996-12-11 | 97680

5 | Itzchak | Lichtner | 1996-12-11 | 1997-12-11 | 98971

5 | Itzchak | Lichtner | 1997-12-11 | 1998-12-11 | 101395

5 | Itzchak | Lichtner | 1998-12-11 | 1999-12-11 | 103978

5 | Itzchak | Lichtner | 1999-12-11 | 2000-12-10 | 107356

5 | Itzchak | Lichtner | 2000-12-10 | 2001-12-10 | 107956

5 | Itzchak | Lichtner | 2001-12-10 | 9999-01-01 | 109501

(10 rows)

To encrypt these salary records, use the ANTIMATTER MIGRATE function call, which is modeled after the standard PostgreSQL UPDATE syntax. Run the following commands on the proxy client to perform the encryption operations:

BEGIN;

SET antimatter.opts='nickname=customer1';

SELECT ANTIMATTER('UPDATE TABLE salaries ENCRYPT salary, to_date

FROM employees

WHERE employees.emp_no = salaries.emp_no

AND first_name = "Itzchak"

AND last_name = "Lichtner"');

COMMIT;

Once the migration is complete, re-run the query from the beginning of this section to confirm that the result remains unchanged. To observe the effect of the migration, connect to the target upstream database and rerun the original SELECT statement. The results should match those presented here:

tenant_id | first_name | last_name | from_date | to_date | salary

-----------+------------+-----------+------------+---------+--------

5 | Itzchak | Lichtner | 1992-12-12 | | 0

5 | Itzchak | Lichtner | 1993-12-12 | | 0

5 | Itzchak | Lichtner | 1994-12-12 | | 0

5 | Itzchak | Lichtner | 1995-12-12 | | 0

5 | Itzchak | Lichtner | 1996-12-11 | | 0

5 | Itzchak | Lichtner | 1997-12-11 | | 0

5 | Itzchak | Lichtner | 1998-12-11 | | 0

5 | Itzchak | Lichtner | 1999-12-11 | | 0

5 | Itzchak | Lichtner | 2000-12-10 | | 0

5 | Itzchak | Lichtner | 2001-12-10 | | 0

(10 rows)